Initial AI Fact-Checking Experiments Raise Questions

Over the past month, we began hand-testing commercial large language models, like ChatGPT and Bard. We asked each some reasonably detailed questions, then scored the results for accuracy and the ability to self-correct. We then totaled up the results to reach qualitative judgments on overall performance. The “head of the class” quickly made itself known.

OpenAI’s GPT-4, while far from perfect, seems to be the large language model that performs best.

But -- like any eighth grader -- GPT-4 had limitations in these trials. The most startling were the moments of near-nonsensical acting out: The model struggled to count the number of boxes in a 3 x 3 grid or divide 17,077 by 7. If a thirteen-year-old were unable to use a calculator to perform the operation 17,077/7, parents would have to be notified about special remediation and tutoring.

This is the AI that is going to destroy humanity?

To explore how machine learning models make such fundamental errors, we asked GPT-4 (the best-performing model from the trials) the following question about counting the number of paths through a 3 x 3 grid:

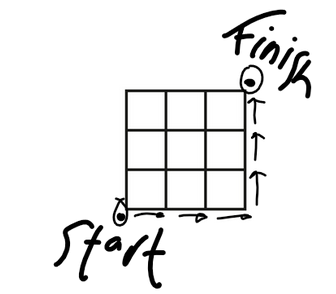

“Imagine a 3 x 3 square grid with borders. The grid has 9 smaller squares in it - all are the same size and all of the borders are vertical, horizontal, and evenly spaced. Imagine we are starting at the bottom left-most corner of the grid. Moving along the borders of the grid -- both the outer perimeter and the borders of the smaller squares in the middle -- how many possible optimal routes are there to reach the top-most right corner of the grid? Assume an "optimal" route means taking no more than the minimum number of steps to get from one corner to the other.”

Using our imaginations, we realize that a 3 x 3 grid is exactly that: a grid three boxes wide and three boxes tall.

- The start point is the bottom-left corner.

- The endpoint is the top right corner.

- We must travel along the solid black lines of the grid.

The answer we are looking for is 20.

Lost in the Grid

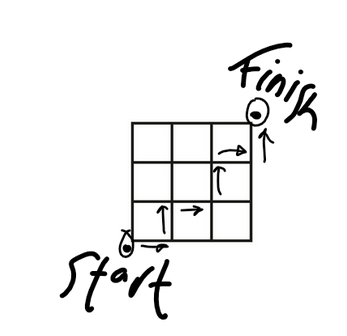

Walking through various routes in the grid reveals that any path with the fewest possible steps uses only Up and Right movements.

One such optimal solution would be: Right, Right, Right, Up, Up, Up, for 6 total steps.

But that is only one possible combination of Rights and Ups that finds a path from the bottom left to the top right. Another solution would be Right, Up, Right, Up, Right, Up.

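The curious could take the time to count them all and find 18 more paths through the grid, for a total of 20. Each optimal route is an ordering of three Rights and three Ups, so the count is the number of ways to choose which three of the six steps are Rights: 6! / (3! x 3!) = 20.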
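The count can also be checked mechanically. What follows is a minimal Python sketch, not part of the original experiment; the function names `enumerate_optimal_routes` and `count_by_formula` are our own illustrative choices. It lists every ordering of three Rights and three Ups and compares the total with the binomial coefficient for choosing 3 of 6 steps.

```python
from itertools import combinations
from math import comb

def enumerate_optimal_routes(width=3, height=3):
    """List every shortest corner-to-corner route as a string of 'R' and 'U' moves."""
    total_steps = width + height
    routes = []
    # Pick which step positions are Rights; every other position must be an Up.
    for right_positions in combinations(range(total_steps), width):
        route = "".join(
            "R" if i in right_positions else "U" for i in range(total_steps)
        )
        routes.append(route)
    return routes

def count_by_formula(width=3, height=3):
    """Count shortest routes directly with a binomial coefficient."""
    return comb(width + height, width)

routes = enumerate_optimal_routes()
print(len(routes))          # number of routes found by enumeration
print(count_by_formula())   # number of routes given by the formula
```

Both approaches should agree on 20, and the enumerated list includes the two example routes above ("RRRUUU" and "RURURU").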

For the above question, we prompted GPT-4 10 times, each prompt in a different conversation, over the course of two days. This was our attempt to control for the possibility that GPT-4 might “memorize” answers across conversations.

Here are the results. Where a response was incorrect, the returned answer is included:

Attempt:

- Incorrect - 6

- Incorrect - 6

- Incorrect - 6

- Incorrect - 6

- Correct

- Correct

- Correct

- Incorrect - 924

- Correct

- Correct

Score: 5/10 = 50%. Grade: F

Afterward, we slightly modified the above prompt. It looks nearly identical, but notice the differences from the first prompt in how the grid and the starting corner are described:

“Imagine a 3x3 square grid with borders. The grid has nine smaller squares in it and one outer square encompassing all the smaller squares. Imagine we are starting at the left-most bottom corner of the grid. Moving along the borders of the grid -- both the outer perimeter and the borders of the smaller squares in the middle -- how many possible optimal routes are there to reach the top-most right corner of the grid? Assume an "optimal" route means taking no more than the minimum number of steps to get from one corner to the other.”

Here is how GPT-4 performed:

Attempt:

- Correct

- Correct

- Correct

- Correct

- Incorrect - 4,096

- Correct

- Incorrect - 462

- Incorrect - 20, but the logic was completely wrong.

- Correct

- Incorrect - 400

Score: 6/10 = 60%. Grade: D-
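For readers who want to re-tally the scores, the two runs can be transcribed and scored as in the sketch below. This is an illustrative Python snippet with variable names of our own choosing. Following the scoring above, the eighth attempt in the second run (an answer of 20 reached through faulty logic) is counted as incorrect.

```python
# True marks a response scored correct, False one scored incorrect, in attempt order.
first_prompt = [False, False, False, False, True, True, True, False, True, True]
second_prompt = [True, True, True, True, False, True, False, False, True, False]

def accuracy(results):
    """Fraction of responses scored correct."""
    return sum(results) / len(results)

for label, results in (("first prompt", first_prompt),
                       ("second prompt", second_prompt)):
    print(f"{label}: {sum(results)}/{len(results)} = {accuracy(results):.0%}")
```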

Living with Failing Grades

When we first scored the models, we thought we had something exciting: The responses to the first prompt seemed to be converging on an incorrect answer (6), and the responses to the second prompt seemed to be converging on the correct answer (20).

But then the responses started to get wacky: GPT-4 suggested 4,096 optimal routes for the second prompt. What is going on?

Why does GPT-4 get the question right about half the time, indicating that it is capable of answering a question like this, and wrong the other half of the time on the exact same prompt? What are the failures that cause GPT-4 to hallucinate some of the time and not others?

We will definitely be investigating why. But there are still very important takeaways from this experiment: GPT-4 and other LLMs should be used cautiously, and they are nowhere near the point of “replacing humanity” or even some entry-level data analyst jobs.

If GPT-4 truly is the “best” LLM on the market right now, random, inexplicable hallucinations like the ones we observed in this experiment -- and the fact that the model doubles down on its incorrect response when asked to re-evaluate or "think step by step" -- ought to remind us of the limitations of LLMs. These tools have a long way to go before users and businesses can rely on them for tasks beyond a simple, one-line question. Yet more and more startups and large businesses are relying on foundational models like GPT-4 to build new products and applications. With this in mind, how can we accurately assess the capabilities and limitations of each LLM? Which LLM is best for your business or practical needs?